Evaluating Text Extraction Algorithms

09 Jun 2011UPDATE 11/6/2011: Added the summary and the results table

Lately I've been working on evaluating and comparing algorithms, capable of extractinguseful content from arbitrary html documents. Before continuing I encourage you to pass trough some of my previous posts, just to get a better feel of what we're dealing with; I've written a short overview, compiled a list of resources if you want to dig deeper and made a feature wise comparison of related software and APIs.

Summary

- The evaluation environment presented in this post consists of 2 datasets with approximately 650 html documents.

- The gold standard of both datasets was produced by human annotators.

- 14 different algorithms were evaluated in terms of precision, recall and F1 score.

- The results have show that the best opensource solution is the boilerpipe library.

- Commercial APIs included in the evaluation environment produced consistent results on both datasets. Diffbot and Repustate API performed best, while others follow very closely.

- Readability's performance is surprisingly poor and lacking consistency between both ports that were put to use in the evaluation setup.

- Further work will include: adding more APIs and libraries to the setup, working on a new extraction technique and assembling a new dataset.

Evaluation Data

My evaluation setup consists of 2 fairly well known datasets:

- The final cleaneval evaluation dataset, with 681 documents (created by the ACL web-as-corpus community).

- Google news dataset, with 621 documents. (harvested by the authors of the boilerpipe library)

The former was harvested from all sorts of web pages, including: articles, blog posts, mailing list archives, forum conversations, dedicated form submission pages etc. The latter was gathered by scraping the google news stream for a longer period of time (cca 6 months). The random sample that became the final dataset of 621 documents from 408 different news type web sites, came from a larger set of 250k documents assembled during the scrapping process.

From their description and related documentation we can conclude that they represent 2 slightly distinctive domains: the google news dataset represents a specific domain of news articles and the cleaneval dataset represents a cross domain collection of documents.

The gold standard counterpart of both datasets was created by human annotators who assessed each document individually. Annotators who assembled the cleaneval gold standard, first used lynx text based browser to filter out all the non-text building blocks. Each annotator than cleaned out the redundant non-content text using his own visual interpretation of the website. On the other hand, annotators of the google news dataset inserted additional span tags (using class names as labels) into the original html document. The class names of span tags indicate the following content labels: headline, main text, supplemental text, user comments, related content. In my experimental setup I use only the content labeled under headline and main text as the gold standard.

As you might have noticed, these datasets are not as large as we would like them to be and there is a fairly good reason behind that: assessing and labeling html documents for content is a tedious task. So was the preprocessing of the cleaneval dataset on my part. The methodology of storing raw html documents for the cleaneval shared task included inserting a special <text> tag around the whole html markup to hold some meta data about the document itself. I had to remove these tags from all documents and insert the basic <html> and <body> tags to those who were obviously stripped of some starting and trailing boilerplate html markup. The reasoning of inserting such tags back into the raw html document is based on the nature of particular extraction software implementations which assume the existence of such tags.

Metrics

I'm using precision, recall and f1 score to evaluate the performance. I covered these in one of my previous posts, but here is a short recap:

Definitions:

Given an HTML document we predict/extract/retrieve a sequence of words and denote them as Sret. Consequentially the sequence of words representing relevant text is denoted as Srel .

Both sequences are constructed by the following procedure:

- Remove any sort of remaining inline html tags

- Remove all punctuation characters

- Remove all control characters

- Remove all non ascii characters (due to unreliable information of the document encoding)

- Normalize to lowercase

- Split on whitespace

The intersection of retrieved and the relevant sequence of words is calculated using the Ratcliff-Obershelff algorithm (python difflib).

As we're dealing with raw data, we have to account for 4 special cases of these metrics:

| Precision | Recall | F1-score | Case |

| 0 | 0 | inf | Missmatch - both retrieved and relevant sequences are not empty, but they don't intersect. |

| inf | 0 | nan | Set of retrieved words is empty - the extractor predicts that the observed document does not contain any content. |

| 0 | inf | nan | Set of relevant words is empty - the document itself does not contain anything useful. |

| inf | inf | nan | Both retrieved and relevant set is empty - the document does not contain nothing useful and the extractor comes back empty handed. |

Results

Currently my evaluation setup includes the following content extractors that were either already reviewed or mentioned in my preceding blog posts, so I won't get into the details of every single one in the context of this writeup:

- Boilerpipe - using two similar variants; the default extractor and the one especially tuned for article type of content.

- NCleaner - again using two of its variants; the non lexical n-gram character language independat model and the model trained on english corpora.

- Readability - using its python port and readability ported to node.js on top of jsdom.

- Pasternack & Roth algorithm (MSS) - authors provided me with access to the implementation presented in the www2009 paper.

- Goose - using my fork to expose the core content extraction functionality.

- Zextractor - internally used service developed by my friends/mentors at Zemanta.

- Alchemy API - using their text extraction API.

- Extractiv - using their semantic on demand RESTul API.

- Repustate API - using the clean html API call.

- Diffbot - using the article API.

Admittedly they are not all specialized for solving problems in a specific domain, say news article content extraction, but they all share one common property: they're capable of distinguishing useful textual content apart from boilerplate and useless content when observing an arbitrary html document.

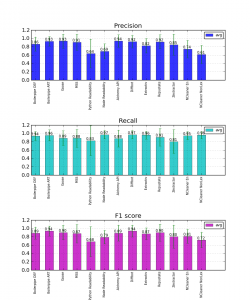

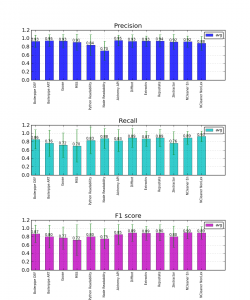

Results were obtained by calculating precision and recall for each document in the dataset for every content extractor. Then we calculate the arithmetic mean of all three metrics for every extractor. Bar charts are employed to visualize the results.

| Cleaneval→ | precision | recall | f1 score |

| Boilerpipe DEF | 0.931 | 0.856 | 0.872 |

| Boilerpipe ART | 0.949 | 0.764 | 0.804 |

| Goose | 0.934 | 0.719 | 0.770 |

| MSS | 0.911 | 0.699 | 0.718 |

| Python Readability | 0.841 | 0.833 | 0.803 |

| Node Readability | 0.704 | 0.878 | 0.753 |

| Alchemy API | 0.950 | 0.828 | 0.854 |

| Diffbot | 0.932 | 0.890 | 0.891 |

| Extractiv | 0.935 | 0.871 | 0.887 |

| Repustate | 0.940 | 0.889 | 0.896 |

| Zextractor | 0.916 | 0.763 | 0.800 |

| NCleaner En | 0.923 | 0.895 | 0.897 |

| NCleaner NonLex | 0.882 | 0.927 | 0.892 |

| Google news→ | precision | recall | f1 score |

| Boilerpipe DEF | 0.863 | 0.938 | 0.887 |

| Boilerpipe ART | 0.931 | 0.955 | 0.939 |

| Goose | 0.934 | 0.887 | 0.901 |

| MSS | 0.907 | 0.882 | 0.875 |

| Python Readability | 0.638 | 0.825 | 0.681 |

| Node Readability | 0.688 | 0.968 | 0.789 |

| Alchemy API | 0.936 | 0.876 | 0.888 |

| Diffbot | 0.924 | 0.968 | 0.935 |

| Extractiv | 0.824 | 0.956 | 0.870 |

| Repustate | 0.917 | 0.907 | 0.898 |

| Zextractor | 0.850 | 0.806 | 0.803 |

| NCleaner En | 0.742 | 0.947 | 0.812 |

| NCleaner NonLex | 0.613 | 0.959 | 0.723 |

Next we explore the distribution of per document measurements. Instead of just calculating the mean of precision, recall and F1 score we can explore the frequencies of per document measurements. In the following chart we visualize frequencies for each metric for all extractors. Notice that we're splitting the [0,1] interval of each metric into 20 bins with equidistant intervals.

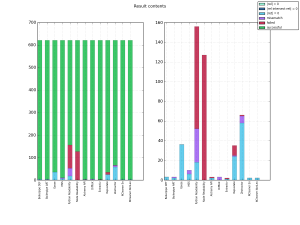

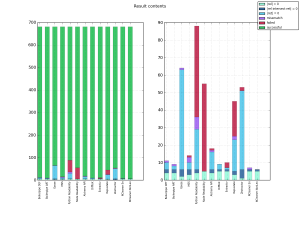

To account for previously mentioned 4 special cases, we employ a stacked bar chart to inspect the margins of useful/successful per document measurements for every dataset.

Special cases of per document measurements - Cleaneval dataset

|

Special cases of per document measurements - Google news dataset |

Every per document measurement can fall into one of the "special cases" category, "successful" category, or an extra "failed" category. The latter stands for measurement instances that failed due to: parsing errors, implementation specific errors, unsupported language errors etc. The right hand side of both figures depicts the same categories without the "successful" part.

This chart is important, because we only make use of measurements who fall under the "successful" category to obtain the distribution and mean for each metric, respectively.

Observations

The foremost observation is the varying performance of NCleaner outside the cleaneval domain, since both variants seem to perform poorly on the google news collection. The cause of such behavior might be the likelihood that NCleaner was trained on the cleaneval corpus.

Readability's poor performance came as a surprise, moreover, its varying results between both ports. Relatively low precision and high recall indicate that readability tends to include large portions of useless text in its output. I'm not quite satisfied of how readability is represented in this experiment as I'm not making use of the original implementation. The node.js port seems not to differ from the original as much as the python port, but I still worry I'll have to use the original implementation on top of a headless webkit browser engine like PhantomJS, to get a better representation.

Notice the consistent performance of commercial APIs (and zemanta's internal service), Alchemy API and Diffbot in particular. According to diffbot's co-founder Michael Tung, their article API is only a small portion of their technology stack. They're using common visual features of websites to train models in order to gain the understanding of various types of web pages (forum threads, photo galleries, contact info pages and about 50 other types). The article API used in this setup is just an additionally exposed model, trained on visual features of article type pages. On the other hand, zextractor (zemanta's internal service) leverages on libsvm to classify atomic text blocks of the newly observed document.

What I find especially interesting are the bimodal distributions of precision and/or recall for MSS and readability's python port. I suspect that they're failing to produce relevant content on really big documents or they're capable of extracting only a limited amount of content. It'll be interesting to explore this phenomena with some additional result visualizations.

Conclusion and Further Work

According to my evaluation setup and personal experience, the best open source solution currently available on the market is the boilerpipe library. If we treat precision with an equal amount of importance as recall (reflected in the F1 score) and take into account the performance consistency across both domains, then boilerpipe performs best. Performance aside, its codebase is seems to be quite stable and it works really fast.

If you're looking for an API and go by the same criteria (equal importance of precision and recall, performance consistency across both domains), diffbot seems to perform best, although alchemy, repustate and extractiv are following closely. If speed plays a greater role in your decision making process; Alchemy API seems to be a fairly good choice, since its response time could be measured in tenths of a second, while others rarely finish under a second .

My further work will be wrapped around adding new software to my evaluation environment (I was recently introduced to justext tool), compiling a new (larger) dataset and working on my own project, that'll hopefully yield some competative results.

Stay tuned for I'll be releasing all the data and code used in this evaluation setup in the near future.