Overview: Extracting article text from HTML documents

02 Mar 2011In the world of web scraping, text mining and article reading utilities (readability bookmarklet) there is an ever growing demand for utilities that are capable of distinguishing parts of a HTML document which represent an article apart from other common website building blocks like menus, headers, footers, ads etc.

Turns out this problem was baffling researchers since the early 00s. Keep in mind, that back then, folks were still making websites with microsoft frontpage. The majority of methods presented in research papers from that epoch are nowadays useless due to strong assumptions and heuristics that don’t apply on today’s web development practices.

In the following chapters I’ll try to review some article text extraction methods that are applicable to today’s websites. They mostly leverage on machine learning, statistics and a wide rage of heuristics.

Boilerpipe library: Boilerplate Removal and Fulltext Extraction from HTML pages

Boilerpipe is probably one of the best open source packages when it comes to full article text extraction that leverages on machine learning. The overall algorithm works by computing both text and structural features on parts of the document and based on these features, decide if the observed part of the document belongs to the article text or not. The decision rules are inferred by a classifier trained on a set of labeled documents.

To obtain a set of learning examples that are fed into a classifier, boilerplate observes a list of features on different levels of the HTML document:

- text frequency in the whole corpus: to obtain phrases commonly used in useless parts of the document

- presence of particular tags that enclose a block of text: <h#> headline, <p> paragraph, <a> anchor and <div> division

- shallow text features: average word length, average sentence length, absolute number of words in the segment

- local context of text: absolute and relative position of the text block

- heuristic features: number of words that start with an uppercase letter, number of words written in all-caps, number of date and time tokens, link density and certain ratios of those previously listed

- density of text blocks: number of words in a wrapped fixed column width text block divided by number of lines of the same block

Each HTML document is segmented into atomic text blocks that are annotated with features listed above and labeled (by a human annotator) with content or boilerpate class.

In the original paper authors used decision trees and SVMs, trained on previously mentioned set of learning examples, to classify parts of the newly observed document as content or boilerplate text.

Extracting Article Text from the Web with Maximum Subsequence Segmentation

The overall algorithm (MSS) transforms the problem of detecting article text in HTML documents to maximum subsequence optimization.

Let’s start of by examining maximal subsequence optimization and it’s problem statement. Given a sequence of numbers, we've been given a task of finding a continuous subsequence where the sum of it's elements is maximal. This is trivial if the elements of the sequence are all non-negative. In other words:

In terms of time complexity this can easily be computed in O(n), where n stands for number of elements in the original sequence.

Now let’s take a look at the representation of documents used in MSS. Each document is tokenized using the following procedure:

- discard everything between <script> and <style> tags

- break up HTML into a list of tags, words and numbers

- apply porter stemming to all words

- generalize numeric tokens

To apply maximum subsequence optimization to the particular representation of the document, MSS uses local token-level classifiers to find a score for each token of the document. A negative score indicates that the observed token is not likely to be considered as content and vica-versa for tokens with positive scores. Because MSS uses a global optimization over all scores, there is no need for highly accurate local classifiers.

Experimental setup in the original paper uses Naive Bayes with 2 types of features for every labeled token in the document:

- trigram of token: the token itself and its 2 successors

- parent tag of token in the DOM tree (this can easily be implemented by maintaining a stack of tags when passing through the token array)

Each score produced by the NB classifier is than transformed with f(p) = p - 0.5 to obtain a sequence of scores ranging from [-0.5, 0.5].

This is basically it; local token-level classifier outputs a sequence of scores to which we apply maximum subsequence optimization. Indexes of the subsequence are used to result the set of tokens that represent the extracted content.

Text Extraction from the Web via Text-to-Tag Ratio

If MSS transformed the problem of article text extraction to maximum subsequence optimization; this approach transforms it to histogram clustering.

Clustering is applied to the text-to-tag ratio array (TTRArray) which is obtained by the following procedure:

- remove all empty lines and everything between <script> tags from the given HTML document

- initialize the TRRArray

- for each line in the document

- let x be the number of non-tag ASCII characters

- let y be the number of tags in that line

- if there are no tags in the current line than TTRArray[ current line ] = x

- else TTRArray[ current line ] = x/y

The resulting TTRArray holds a text-to-tag ratio for every line in the filtered HTML document.

Clustering can be applied to the TTRArray to obtain lines of text that represent the article by considering the following heuristic:

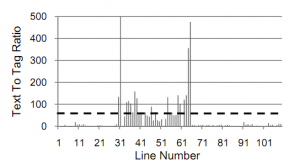

TTRArray (image courtesy of Weninger & Hsu: Text Extraction from the Web via Text-to-Tag Ratio)

For each k in TTRArray, the higher the TTR is for an element k relative to the mean TTR of the entire array the more likely that k represents a line of content-text within the HTML-page.

Prior to clustering, TTRArray is passed by a smoothing function to prevent the loss of short paragraph lines that might still be part of the content at the edges of the article.

Weninger & Hsu propose the following clustering techniques to obtain article text:

- K-means

- Expectation Maximization

- Farthest First

- Threshold clustering; using standard deviation as a cut-of threshold

VIPS: a Vision based Page Segmentation Algorithm

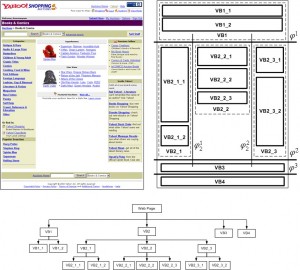

Semantic tree structure (source http://www.zjucadcg.cn/dengcai/VIPS/VIPS.html)

What’s distinctive about this algorithm from others mentioned in this post is making full use of the visual page layout features.

It first extracts blocks from the HTML DOM tree and assigns them a value that indicates the coherency of the block based on visual perception. A hierarchical semantic structure is then employed to represent the structure of the website. The algorithm applies vertical and horizontal separators to the block layout to construct such a semantic structure.

The constructed structure is then used to find parts of the website that represents article text and other common building blocks.

Readabillity

A perfect example of good ol’ hand crafted heuristics. Readability relies solely on common HTML coding practices to extract article text, title, corresponding images and even the next button if the article seems to be segmented into several pages.

Readability was originally developed as a JavaScript bookmarklet with a mission to mitigate the troubles of reading trough ad cluttered news websites. Readability is nowadays the most popular article text extraction tool.

Originally written and maintained in JavaScript it was also ported to python and ruby.

Conclusion

I admit I haven’t covered all the text extraction algorithms lurking in the wild, but hopefully this post will save some time for those of you who want to dive straight into sparse literature and software packages.

If you’ve been working on something similar or stumbled across a library that’s capable of full article text extraction from HTML documents, please drop me a line in the comments.

In my next post I’ll try to bundle up a repository of available open source packages and web services capable of text extraction, so keep in touch by subscribing to my RSS feed.